We are excited to announce the latest release of HiddenLayer’s AI Detection & Response (AIDR), a testament to our commitment to providing the industry's most advanced AI security solutions. This update introduces new features and enhancements that make securing AI more robust, intuitive, and effective.

Release Notes for AIDR version 26.1.0 released on January 15, 2026.

The latest Prompt Injection Model improves detection efficacy while reducing false positives when analyzing version-controlled source code and other files from Git repositories, including longer contextual inputs.

Performance optimizations for Compute Unified Device Architecture (CUDA) deployments reduce overall inference latency and improve runtime responsiveness.

Applied security patches addressing emergent vulnerabilities in the runtime security container.

Release Notes for AIDR version 25.12.2 released on December 22, 2025.

Customers can now exclude submitted prompts and LLM responses from INFO-level logging and above, providing greater control over what content is captured in logs.

- Improved data handling and privacy.

- Greater control over logging that helps reduce unnecessary exposure of sensitive prompt or response data.

- See AIDR Container Configuration for more information.

The latest Prompt Injection model improves detection of malicious content embedded within JSON payloads, strengthening protection for applications that rely on structured inputs and outputs.

- Stronger protection for LLM workloads.

- Enhanced detection of malicious JSON-based payloads.

- Improves security for APIs, agents, and tool-driven LLM workflows.

Release Notes for AIDR version 25.12.1 released on December 11, 2025.

The Prompt Injection Classifier v5.2 reduces false positives and improves accuracy on focused prompt injection tactics and techniques described in our APE Taxonomy. Learn more at: Introducing a taxonomy of Adversarial Prompt Engineering.

This release also includes an enhanced code-detection engine that eliminates false positives originating from JSON and other structured inputs.

Additional improvements in the v5.2 model include:

- More robust handling of PII management requests.

- More robust handling of cybersecurity topics that are neither prompt injections nor attempts at data and context leakage.

- Lower false positives on code-related questions.

Our Code Detection has been updated to v2.

- Expanded language support.

- Omits detections on data formats, such as JSON, YAML, and XML.

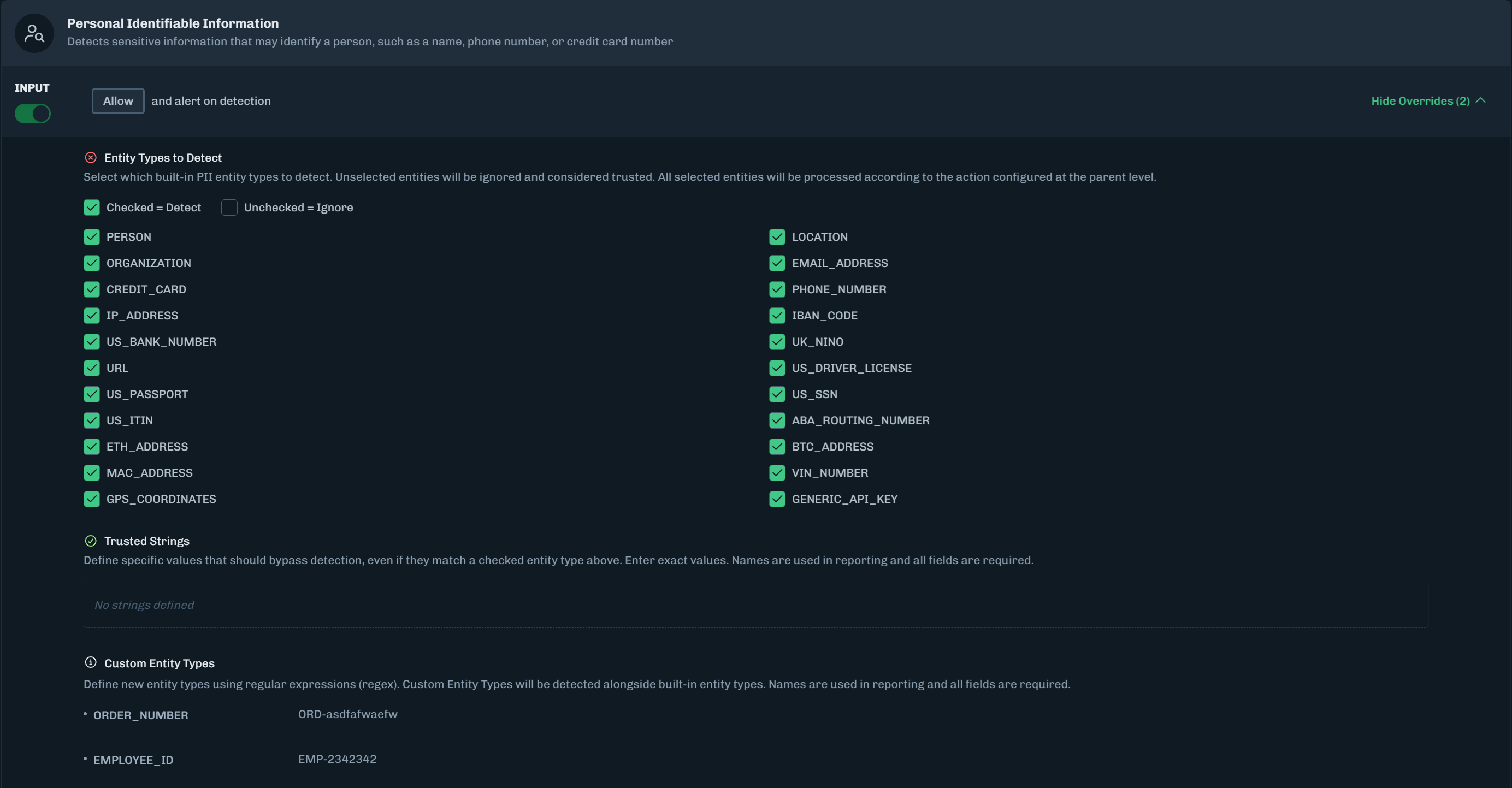

Customers can also now configure PII Overrides directly within the Policy UI in the Console, enabling more flexible and precise policy control.

Release Notes for AIDR version 25.12.0 released on December 1, 2025.

If you use any of the following configurations, please review and update your implementation before upgrading.

- HL_LLM_ENTITY_TYPE=ALL or x-llm-entity-type=all may now return additional entity types that were previously unseen.

- Specifying exclusions (e.g., HL_LLM_INPUT_ENTITY_EXCLUSIONS, HL_LLM_OUTPUT_ENTITY_EXCLUSIONS) may result in newly detected entities due to expanded classification coverage.

To ensure compatibility and avoid unexpected behavior after upgrading:

Replace entity exclusion configurations with the new override-based settings:

- HL_LLM_OVERRIDE_INPUT_PII_ENTITIES and x-llm-override-input-pii-entities

- HL_LLM_OVERRIDE_OUTPUT_PII_ENTITIES and x-llm-override-output-pii-entities

Transition to these new settings before upgrading to v25.12 for a smooth migration experience and improved control over PII entity management.

Beginning in v25.12, HL_LLM_ENTITY_TYPE / x-llm-entity-type is officially deprecated.

This legacy configuration will continue to function for the next three CalVer releases.

It will be removed in a future release once the deprecation window concludes.

Customers should migrate to the override-based PII entity configuration options as soon as possible:

- HL_LLM_OVERRIDE_INPUT_PII_ENTITIES / x-llm-override-input-pii-entities

- HL_LLM_OVERRIDE_OUTPUT_PII_ENTITIES / x-llm-override-output-pii-entities

These newer settings provide clearer, more predictable behavior and align with the direction of our upcoming configuration management UX improvements.
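
As a pre-upgrade check, the sketch below scans an environment mapping for the legacy variables named in these notes. The function name `find_legacy_settings` is hypothetical and not part of AIDR. The sketch only reports which legacy names are set and makes no assumption about the value format of the new override settings.

```python
import os
from typing import List, Mapping

# Legacy names and their replacements, copied from the release notes above.
LEGACY_SETTINGS = (
    "HL_LLM_ENTITY_TYPE",
    "HL_LLM_INPUT_ENTITY_EXCLUSIONS",
    "HL_LLM_OUTPUT_ENTITY_EXCLUSIONS",
)
REPLACEMENTS = (
    "HL_LLM_OVERRIDE_INPUT_PII_ENTITIES",
    "HL_LLM_OVERRIDE_OUTPUT_PII_ENTITIES",
)


def find_legacy_settings(env: Mapping[str, str]) -> List[str]:
    """Return the legacy setting names present in `env`, in a fixed order."""
    return [name for name in LEGACY_SETTINGS if name in env]


# Report anything that should be migrated in the current process environment.
for name in find_legacy_settings(os.environ):
    print(f"{name} is set; migrate to {' / '.join(REPLACEMENTS)}")
```

The same check could be applied to request headers by swapping in the `x-llm-*` header names listed above.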

Enhanced how AIDR handles allowed overrides for Prompt Injection.

- Previously, prompts matching an allow override skipped injection detection entirely.

- Now, those prompts are partially redacted and then re-evaluated for injection attempts.

This provides finer control over false positives and a more nuanced security posture for complex prompt scenarios.
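
To illustrate the difference between skipping detection and redacting then re-evaluating, here is a minimal sketch. It is not AIDR's implementation: the regular-expression allow patterns, the `[ALLOWED]` placeholder, and the `is_injection` callable are all assumptions made for illustration, and the notes do not specify exactly which text is redacted.

```python
import re
from typing import Callable, Iterable


def evaluate_with_allow_overrides(
    prompt: str,
    allow_patterns: Iterable[str],
    is_injection: Callable[[str], bool],
    placeholder: str = "[ALLOWED]",
) -> bool:
    """Redact text matching any allow pattern, then classify what remains."""
    redacted = prompt
    for pattern in allow_patterns:
        # Assumption: only the matched span is redacted, not the whole prompt.
        redacted = re.sub(pattern, placeholder, redacted)
    # The remainder is still checked, so an allowed phrase cannot shield
    # an injection attempt placed elsewhere in the same prompt.
    return is_injection(redacted)
```

Under the earlier behavior, any prompt matching an allow pattern would have returned a negative result without the classifier being called at all.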

When a PII allow override is triggered, API responses now include the names of the matched overrides in the metadata.

This improves observability and makes it easier to understand exactly what’s being allowed or filtered during PII analysis.

We’ve streamlined and modernized our supported entity set for better performance and accuracy.

Removed or replaced entities:

- DOMAIN → use URL

- UK_NATIONAL_INSURANCE_NUMBER → use UK_NINO

- US_ABA_ROUTING_TRANSIT_NUMBER → use ABA_ROUTING_NUMBER

- UK_PASSPORT, US_HEALTHCARE_NPI, NATIONAL_ID — removed with no replacement
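
When updating an existing entity list, the renames and removals above can be applied mechanically. The helper below is a small sketch; `migrate_entities` is a hypothetical name, and the mappings are copied directly from the list above.

```python
# Renames and removals copied from the list above.
RENAMED = {
    "DOMAIN": "URL",
    "UK_NATIONAL_INSURANCE_NUMBER": "UK_NINO",
    "US_ABA_ROUTING_TRANSIT_NUMBER": "ABA_ROUTING_NUMBER",
}
REMOVED = {"UK_PASSPORT", "US_HEALTHCARE_NPI", "NATIONAL_ID"}


def migrate_entities(names):
    """Return (kept, dropped): updated entity names, and names with no replacement."""
    kept, dropped = [], []
    for name in names:
        if name in REMOVED:
            dropped.append(name)
            continue
        new_name = RENAMED.get(name, name)
        if new_name not in kept:  # avoid duplicates such as DOMAIN plus URL
            kept.append(new_name)
    return kept, dropped
```

For example, `migrate_entities(["DOMAIN", "URL", "UK_PASSPORT", "PERSON"])` keeps `URL` and `PERSON` and reports `UK_PASSPORT` as dropped.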

Improved detections:

- GPS_COORDINATES and ORGANIZATION now detect correctly

Performance boost:

- The PII detector is now significantly faster when not using complex entities like ORGANIZATION, PERSON, or LOCATION.

Our Prompt Injection Model has been updated to v5.1, bringing improved detection accuracy and resilience against emerging attack techniques.

- The latest model is trained on an expanded, balanced dataset of more than 1 million samples, ensuring strong, real-world performance across a wide variety of prompt manipulation tactics.

- Training sources were informed by HiddenLayer’s Adversarial Prompt Engineering (APE) taxonomy, a structured framework that categorizes known LLM attack patterns. Learn more at: APE Viewer.

This model, first introduced in the 25.10 release and further refined here, delivers our strongest performance to date across internal benchmarks, with significant improvements in both precision and recall for prompt injection detection.

This release includes an update to the Starlette HTTP library to remediate CVE-2025-62727. The issue was limited to the container distribution and did not affect the functionality of the HiddenLayer product.

Release Notes for AISec Platform Console version 25.10.0 released on October 23, 2025.

- Adds a top-level response object to the Interactions API which includes the security action and threat level data based on matching the input analysis to the customer’s security configuration.

- Adds the ability to configure a timeout (in seconds) for the code detector, and to configure whether the detector fails open or closed when a timeout occurs.

- This release includes bug fixes.
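
The fail-open versus fail-closed choice for the code detector timeout can be pictured with the following sketch. It is a generic illustration and not AIDR's implementation: `detect_with_timeout`, its parameters, and the boolean result are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor
from concurrent.futures import TimeoutError as FutureTimeout


def detect_with_timeout(detector, text, timeout_s, fail_open):
    """Run detector(text) and return True if the text should be flagged.

    On timeout, fail open returns False (let the request through) and
    fail closed returns True (treat the request as a detection).
    """
    pool = ThreadPoolExecutor(max_workers=1)
    future = pool.submit(detector, text)
    try:
        return bool(future.result(timeout=timeout_s))
    except FutureTimeout:
        return not fail_open
    finally:
        # The worker thread cannot be killed; stop waiting for it and move on.
        pool.shutdown(wait=False)
```

Failing open favors availability, while failing closed favors caution when the detector cannot finish in time.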

Release Notes for AISec Platform Console version 25.9.0 released on September 30, 2025.

- The Interactions API is now generally available and provides detailed security analysis for LLM interactions. The API is supported via the SaaS API, the AIDR-G Distribution image, and the SDK.

- AIDR now automatically groups inferences and detections under a default Project if no project ID or alias is supplied by the user at runtime.

- AIDR now provides out-of-the-box configuration support for retrieving sensitive information from Azure Key Vault.

Release Notes for AISec Platform Console version 25.8.0 released on August 26, 2025.

A new AIDR page for reviewing your detections and easily viewing specific prompts and responses.

- See the new page here (requires a login): AIDR page.

This replaces the Detections / AIDR page in the console.

- Support for more robust formats for model ID, including ARNs.

Release Notes for AISec Platform Console version 25.7.1 released on August 16, 2025.

- Databricks offers integrations with OpenAI models.

- Using AIDR for GenAI, you can add reverse proxy routes for Databricks OpenAI payloads.

- See the AIDR Deployment Guide for more information.

Release Notes for AIDR (LLM Proxy) v25.7.0 released on July 29, 2025.

- New integrations with AWS Secrets Manager.

- Integration with ECS to pull IAM Role Credentials, for easier Bedrock and SageMaker integrations. The proxy continues to support pulling credentials from the instance as well as the container.

- See the AIDR Deployment Guide for more information.

Release Notes for AIDR (LLM Proxy) v25.6.0 released on June 26, 2025.

The AIDR model version 5:

- Provides protection against TokenBreak attacks. TokenBreak attacks can bypass safety features and guardrails for large language models (LLMs).

- Significantly lower false positives across the board.

- Improved true positive rate for non-English languages.

- Customers can now create projects to represent unique AI applications or use cases.

- Projects can use a custom ruleset to configure AIDR detectors, rather than using one universal ruleset for all use cases.

- The Sandbox in the Console now provides historical chat context and more sample prompts.

Release Notes for AIDR (LLM Proxy) v25.5.2 released on May 29, 2025.

Remotely manage detection configuration from the console, rather than requiring configuration via environment variables or headers.

- Detection configuration for Prompt Injection, DDOS, PII, and Guardrails is supported.

- Headers sent in the request can override remote rules if the local configuration is set to allow header overrides.

- Once a default ruleset is established, it will override any conflicting local configurations.

- Rulesets are polled for changes once a minute, so changes should propagate to your deployment in less than five minutes.

- Releasing the V4.5 Prompt Injection model, with significantly higher performance on English and other supported languages (German, Spanish, Korean, Japanese, French, and Italian).
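
The precedence rules for remote rulesets described above can be sketched as a simple merge. This is an illustration under stated assumptions, not the product's code: `effective_config`, the dictionary representation, and the setting keys are hypothetical.

```python
def effective_config(local, remote, headers, allow_header_overrides):
    """Merge settings in order: local, then remote ruleset, then headers if allowed."""
    merged = dict(local)
    # The remote ruleset overrides any conflicting local configuration.
    merged.update(remote)
    # Request headers win only when the local configuration permits overrides.
    if allow_header_overrides:
        merged.update(headers)
    return merged
```

Because rulesets are polled rather than pushed, a deployment would recompute this merged view each time a poll returns a changed ruleset.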

Release Notes for AIDR (LLM Proxy) v25.3.1 released on April 3, 2025.

- Mistral models are now supported in Amazon Bedrock.

- Quick Scan now scans the full input, where previously it scanned just the first 512 tokens. It doesn't do a second scan with non-alphanumeric characters stripped. As a consequence, long prompts will have higher latency with Quick Scan.

- Full Scan does the same but also does a second scan with non-alphanumeric characters stripped.
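
The difference between the two scan modes can be sketched as follows. The `classify` callable stands in for the prompt injection model and is hypothetical, as are the function names. Whether whitespace is preserved when stripping is not stated in these notes; this sketch assumes it is.

```python
import re


def strip_non_alphanumeric(text):
    """Remove characters that are not letters, digits, or whitespace."""
    # Assumption: whitespace is kept so that word boundaries survive.
    return re.sub(r"[^\w\s]|_", "", text)


def quick_scan(text, classify):
    """One pass over the full input."""
    return classify(text)


def full_scan(text, classify):
    """One pass over the full input plus a second pass on the stripped text."""
    first = classify(text)
    second = classify(strip_non_alphanumeric(text))
    return first or second
```

The second pass is what lets Full Scan catch payloads that hide keywords behind inserted punctuation, at the cost of running the classifier twice.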

Release Notes for AIDR (LLM Proxy) v25.3.0 released on March 13, 2025.

- For the LLM Sandbox in the Console, 2025 has been added to the OWASP Scenario. Example: LLM01: 2025 Prompt Injection.

- For AI Detection & Response, the prompt analyzer returns owasp_2025 in the response body.

A new version of the prompt injection detection classifier with an emphasis on language translation support.

Release Notes for AIDR (LLM Proxy) v25.2.0 released on February 19, 2025.

AI Detection & Response can utilize a GPU to improve throughput and performance.

Refusals can now be detected based on the language in outputs. Turning on this feature (configurable) allows customers to detect refusals caused by custom system prompts, rather than the generic set of safety guardrails established by upstream LLM providers.

For reverse proxy requests (i.e., passthrough requests), the Proxy now propagates upstream errors back to the requester instead of responding with an HTTP 502 or HTTP 500. SageMaker and Bedrock will continue to always respond with HTTP 502 for upstream errors.

Release Notes for AIDR (LLM Proxy) v25.1.0 released on January 21, 2025.

A single AIDR instance can now route traffic to multiple AWS Bedrock instances with different credentials.

Release Notes for AIDR (LLM Proxy) v24.12.0 released on December 17, 2024.

Increased efficacy on Japanese and Korean prompts.

Release Notes for the AIDR (LLM Proxy) v24.10.3 released on November 26, 2024.

A new prompt injection detection for AIDR, Control tokens, identifies when a prompt contains specific control tokens known to confuse LLMs and allow a malicious user to subvert their instructions.
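
As a rough illustration of the idea, the sketch below looks for a few well-known special tokens in a prompt. The token list is an assumption chosen for the example (ChatML-style and GPT-style markers); these notes do not state which tokens AIDR checks, and `find_control_tokens` is a hypothetical name.

```python
# Example special tokens used by some chat templates and tokenizers.
# Assumption: this list is illustrative only, not AIDR's actual list.
CONTROL_TOKENS = ("<|im_start|>", "<|im_end|>", "<|endoftext|>")


def find_control_tokens(prompt):
    """Return the control tokens from CONTROL_TOKENS that appear in the prompt."""
    return [token for token in CONTROL_TOKENS if token in prompt]
```

A non-empty result would indicate that user-supplied text is trying to imitate the structure the model uses to separate roles or end a sequence.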

AIDR now supports Guardrail Detection for Gemini, as well as OpenAI and models hosted on Azure. Guardrail detection works by deterministically passing through the field set by the LLM provider, allowing a guardrail event to be added into security workflows. Anthropic does not offer a guardrail field today, so AIDR does not support guardrail detection for Anthropic models.

AIDR supports AWS instance profiles for authentication to SageMaker/Bedrock.

Release Notes for the AIDR (LLM Proxy) v24.10.0 released on October 15, 2024.

Released a new version of the model with higher accuracy.

Release Notes for the AIDR (LLM Proxy) v24.9.1 released on September 23, 2024.

The AIDR SIEM logs now provide a persistent event_id.

Release Notes for the AIDR (LLM Proxy) v24.9.0 released on September 17, 2024.

The Prompt Analyzer now offers full AIDR functionality, allowing customers running AIDR to check input and output safety at any point in their application.

Enhancements to AIDR code detections to improve security and performance.

This release of AIDR reduces compute usage.

Release Notes for the AIDR (LLM Proxy) v24.8.1 released on September 3, 2024.

AIDR can now run as a non-root user. This allows users who require that their pods do not run as root to install AIDR.

This release also includes performance improvements and bug fixes.

Release Notes for the AIDR (LLM Proxy) v24.8.0 released on August 20, 2024.

AIDR now supports unmapped route passthrough, sending your request to the generative AI provider, regardless of whether the endpoint is directly supported. This new, optional global policy setting in the LLM Proxy provides a seamless user experience.

Release Notes for the AIDR (LLM Proxy) v24.7.0 released on July 23, 2024.

This release includes performance improvements and bug fixes.

Release Notes for the AIDR (LLM Proxy) v24.6.0 released on June 20, 2024.

This release includes three new global policy settings to improve the user experience.

- Include Block Message Reasons - When enabled, includes the block message reasons in the response.

- Proxy Enabled Passthrough Streaming - When enabled, the proxy will immediately start streaming the response back to the requester. Currently available for OpenAI and Azure OpenAI.

- Max Request Size - The maximum size for a request or a response, in bytes.

Modified and improved code detections in the analyzer.

When a modality restriction detection occurs, the LLM Proxy includes the MITRE ATLAS ID.

The following items have been resolved for this release.

- Proper handling of PII redaction when a context window is set to LAST.

- Added support for OpenAI chat completion functions.

- Resolved an issue with reverse-proxy routes for SageMaker and Bedrock. Updated the LLM Proxy Deployment Guide with examples for SageMaker and Bedrock.