When AIDR is deployed in Hybrid mode, metadata for each inference is sent back to HiddenLayer to power the UI and provide visualizations for users to review.

The following table lists the data that is sent.

| Type | Description |
|---|---|
| Inference Event | Metadata about the inference. |
| Inference Detection | Metadata about any detection found during the inference. |

When AIDR is deployed in Disconnected mode, no data is sent back to the HiddenLayer AISec Platform.
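The difference between the two modes can be illustrated with a small sketch. This is a hypothetical Python model of the rule described above, not HiddenLayer's implementation or API: the names `DeploymentMode`, `InferenceMetadata`, `records_to_send`, and the `inference_id` and `detection_found` fields are assumptions, and it assumes a detection record is produced only when a detection was found.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Any, Dict, List


class DeploymentMode(Enum):
    # Hypothetical enum mirroring the two documented deployment modes.
    HYBRID = "hybrid"
    DISCONNECTED = "disconnected"


@dataclass
class InferenceMetadata:
    # Hypothetical fields, for illustration only.
    inference_id: str
    detection_found: bool


def records_to_send(mode: DeploymentMode, meta: InferenceMetadata) -> List[Dict[str, Any]]:
    """Return the metadata records that would be sent back for one inference."""
    # Disconnected mode: nothing leaves the deployment.
    if mode is DeploymentMode.DISCONNECTED:
        return []
    # Hybrid mode: an inference event record is always sent.
    records: List[Dict[str, Any]] = [
        {"type": "Inference Event", "inference_id": meta.inference_id}
    ]
    # Assumption: a detection record is added only when a detection was found.
    if meta.detection_found:
        records.append({"type": "Inference Detection", "inference_id": meta.inference_id})
    return records
```

For example, `records_to_send(DeploymentMode.DISCONNECTED, meta)` returns an empty list for any `meta`, while Hybrid mode always yields at least the inference event record.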

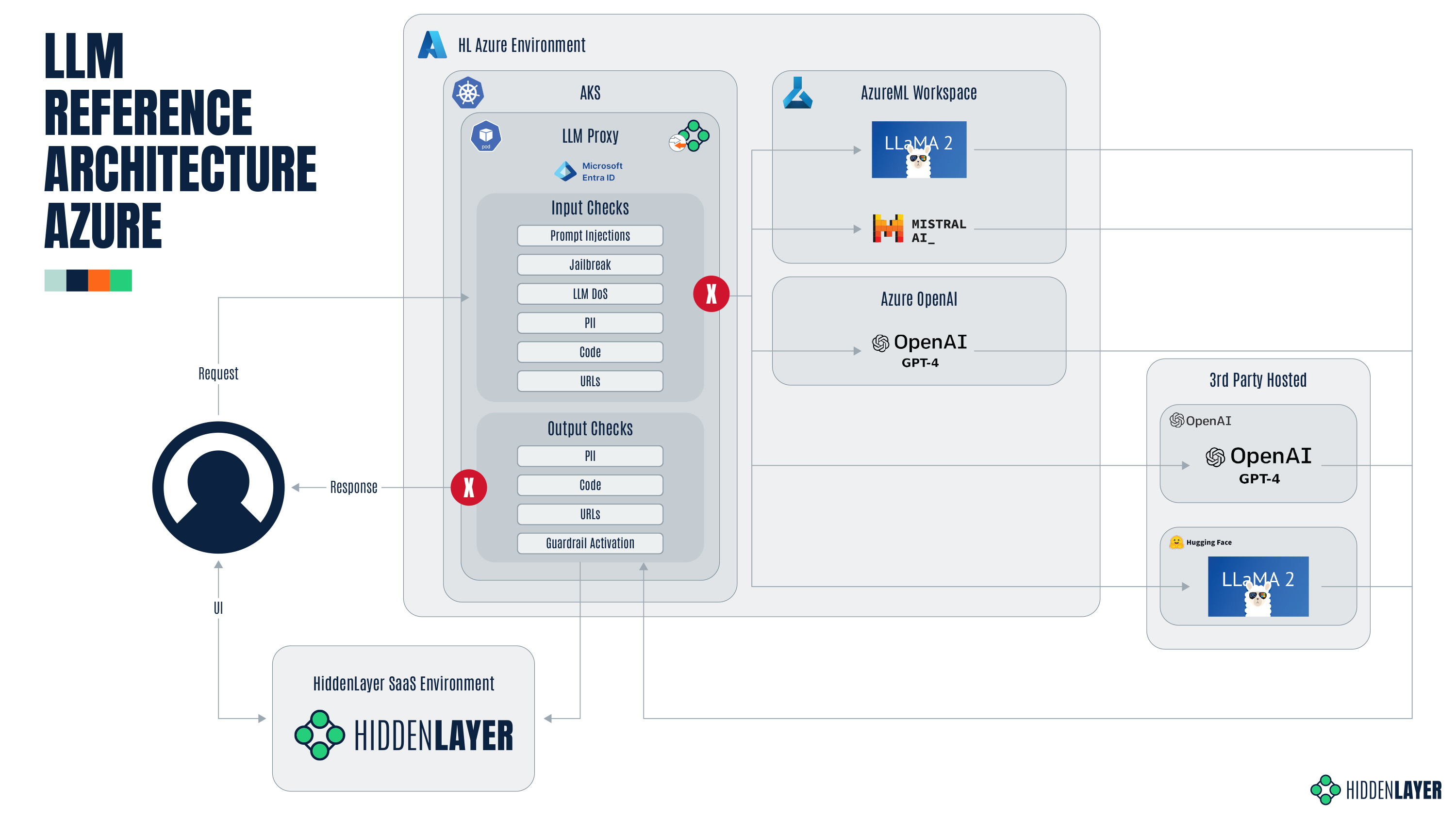

Below is an example reference architecture for an Azure deployment using Azure Kubernetes Service (AKS).

With the AIDR LLM proxy in place and configured:

- Input Checks: When a request is submitted, AIDR blocks any request that triggers an input check, so it never reaches the model.
  - In the image below, this is the red X between the LLM Proxy Input Checks and the Models (AzureML Workspace, Azure OpenAI, 3rd Party Hosted).
- Output Checks: When the LLM returns a response, AIDR blocks any response that triggers an output check, so it never reaches the requestor.
  - In the image below, this is the red X between the LLM Proxy Output Checks and the Requestor.
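The ordering of the proxy's checks can be sketched as follows. This is a minimal, hypothetical Python illustration of the flow only (input checks, then the model call, then output checks); it is not HiddenLayer's implementation or API. The names `proxy_request` and `ProxyResult`, and the convention that a check returns a reason string when it fires and `None` otherwise, are assumptions.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional

# Hypothetical check signature: returns a reason string if the check fires, else None.
Check = Callable[[str], Optional[str]]


@dataclass
class ProxyResult:
    blocked: bool
    stage: Optional[str]     # "input" or "output" when blocked, else None
    reason: Optional[str]    # reason reported by the check that fired
    response: Optional[str]  # model response, only set when nothing was blocked


def proxy_request(
    prompt: str,
    input_checks: List[Check],
    call_model: Callable[[str], str],
    output_checks: List[Check],
) -> ProxyResult:
    # Input checks run before the request reaches the model.
    for check in input_checks:
        reason = check(prompt)
        if reason is not None:
            # Blocked at input: the model is never called.
            return ProxyResult(True, "input", reason, None)

    response = call_model(prompt)

    # Output checks run before the response reaches the requestor.
    for check in output_checks:
        reason = check(response)
        if reason is not None:
            # Blocked at output: the response is withheld from the requestor.
            return ProxyResult(True, "output", reason, None)

    return ProxyResult(False, None, None, response)
```

Keeping the two loops on either side of `call_model` reflects the key property described above: a request that fails an input check never consumes a model call, and a response that fails an output check is never returned to the requestor.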